How To Avoid The Top 10 Business Limiting Website Customer Experience Mistakes Made By Law Firms

INTRODUCTION

As websites and mobile apps have become so important to all businesses, an increased focus on the quality of customer experience of the company website will pay dividends.

Today, website visitors expect more as they compare you against leaders from other sectors, like Amazon, Facebook, HubSpot or Uber. Yet there are often common flaws in how the implemented design supports your communications goals and the customer journey.

This guide explores the most common mistakes and suggests how you can improve your approach.

Consider the growing importance of CX within e-commerce, which is no longer just about selling; retailers are investing more in the tools, processes, data and people to personalise the customer experience.

Brands like House of Fraser have appointed Chief Customer Officers with board level ownership of the customer experience across the organization, and UX teams have expanded to become CX teams with disciplines including user research, behavioural analysis and UX design.

Research by Adobe and Econsultancy reveals that agencies and companies both rate optimising the customer experience as the most exciting business opportunity.

Our mantra at Law Firm Marketing Agency for managing all digital activity is to Plan, Manage, Optimize, and this particularly applies to CX since it is all too easy to “just do it” without thinking about your business goals, customers and how best to deliver online content and services.

Mistake #1 Lack of Customer Insight Driving UX Design

Usability and UX are key components of customer experience. Understanding what users do and why, and translating that insight into high quality solutions that work seamlessly across devices, is the key to customer-centric site design.

How can you design a solution if you don't understand your target audience, their needs and motivations for using your website?

There is still a surprising lack of focus on customer insight, with many organisations reliant upon web analytics data (quantitative) but lacking a process to capture direct customer feedback (qualitative).

This compromises design because the output isn't necessarily aligned with user needs, creating friction in the process. A good example is form design for mobile devices; analytics could show good checkout or form conversion but user research reveals customer frustration as forms don't display the most appropriate keypad based on field type. For example: email field doesn't default to email keypad.
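As a minimal sketch of the keypad point, the Python helper below renders form inputs with the standard HTML `type`, `inputmode` and `autocomplete` attributes, which mobile browsers use as hints for which keypad to show. The field kinds and the `render_input` helper are hypothetical names used for illustration only.

```python
from html import escape

# Map a logical field kind to standard HTML attributes that hint the mobile keypad.
# The field kinds on the left are illustrative names, not taken from any framework.
KEYPAD_HINTS = {
    "email": {"type": "email", "inputmode": "email", "autocomplete": "email"},
    "phone": {"type": "tel", "inputmode": "tel", "autocomplete": "tel"},
    "quantity": {"type": "text", "inputmode": "numeric"},
}


def render_input(name: str, kind: str) -> str:
    """Return an <input> tag whose attributes match the kind of data expected."""
    # Unknown kinds fall back to a plain text field.
    attrs = {"name": name, **KEYPAD_HINTS.get(kind, {"type": "text"})}
    rendered = " ".join(
        f'{key}="{escape(value, quote=True)}"' for key, value in attrs.items()
    )
    return f"<input {rendered}>"


print(render_input("contact_email", "email"))
```

Keeping the mapping in one place makes it easier to check, during user research, that every field type on a form requests an appropriate keypad.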

STRATEGY RECOMMENDATION: Conduct UX research to validate new updates

When introducing new or updating existing website features, conduct UX research to validate user needs and identify points of friction. This can be done using a variety of low-cost, scalable methods:

- Online survey to registered/opt-in customers (this allows you to ask qual and quant questions)

- Remote video testing using demographic targeting to get the appropriate sample - this is remote, unmoderated user testing that lets you see a user's screen while they work through tasks and give audio feedback

- User panel (typically comprising brand loyalists) - by creating a research panel of users, you can reach out to them to ask for feedback on the site

- Persistent feedback forms on the website, such as intercepts from tools like Hotjar and Intercom

Mistake #2 No Personalized Approach to User Journeys and Content

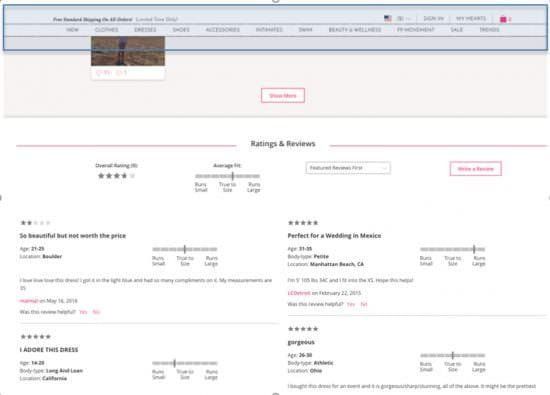

Online audiences are heterogeneous; they comprise a variety of different user types and behaviours. Many websites fail to recognize the differences between these users and provide a uniform website experience with the same content for everyone.

Personalisation has been shown to increase ecommerce conversion. According to research from Dynatrace, marketers report an average uplift in online sales of 19% from personalizing web experiences.

In our article on personalized product recommendations, we show how Millets increased conversion rates by up to 332% on some pages by promoting personalized product recommendations to customers.

Personalisation is highly relevant to B2B too, where different decision-makers have distinct needs. Let’s take the example of Sage selling accounting software to SMEs, where multiple people are often involved in the purchase decision:

- The end user is the accountant/finance person who will use the software and needs to know it enables them to do their job and satisfy compliance regulations

- The IT team needs to know the software aligns with their enterprise technology principles and can integrate with other relevant systems

- The procurement officer needs to know the pricing and product/service quality aligns with the business policy for supplier selection

A one-size-fits-all content strategy fails to address each audience’s unique needs and barriers to purchase. Personalization tailors content to suit each person and helps e-commerce teams tackle potential barriers in the user journey.

STRATEGY RECOMMENDATION: Segment Your Customers

Find a way to segment your customers based on their demographic profile and/or browsing and purchasing behaviour, then tailor content and promotions to each audience.
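One simple way to start is with ordered, rule-based segments. The sketch below is illustrative only: the `Visitor` fields, segment names, thresholds and banner copy are all assumptions, and a real implementation would draw on your own analytics or CRM data.

```python
from dataclasses import dataclass


@dataclass
class Visitor:
    # Hypothetical behavioural fields; substitute whatever your data provides.
    visits_last_90_days: int
    orders_last_year: int
    viewed_pricing_page: bool


def assign_segment(visitor: Visitor) -> str:
    """Assign exactly one segment per visitor using ordered rules."""
    if visitor.orders_last_year >= 3:
        return "loyal_customer"
    if visitor.orders_last_year >= 1:
        return "existing_customer"
    if visitor.viewed_pricing_page or visitor.visits_last_90_days >= 3:
        return "engaged_prospect"
    return "new_visitor"


# Hypothetical content choices tailored to each segment.
HOMEPAGE_BANNER = {
    "loyal_customer": "Early access to new arrivals",
    "existing_customer": "Recommended for you",
    "engaged_prospect": "Compare plans and pricing",
    "new_visitor": "See how it works",
}


def banner_for(visitor: Visitor) -> str:
    """Pick the banner that matches the visitor's segment."""
    return HOMEPAGE_BANNER[assign_segment(visitor)]


print(banner_for(Visitor(visits_last_90_days=4, orders_last_year=0, viewed_pricing_page=False)))
```

Because the rules are evaluated in order, each visitor lands in a single segment, which keeps reporting on segment performance unambiguous.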

Mistake #3 Failure to Understand Device Specific Behaviour

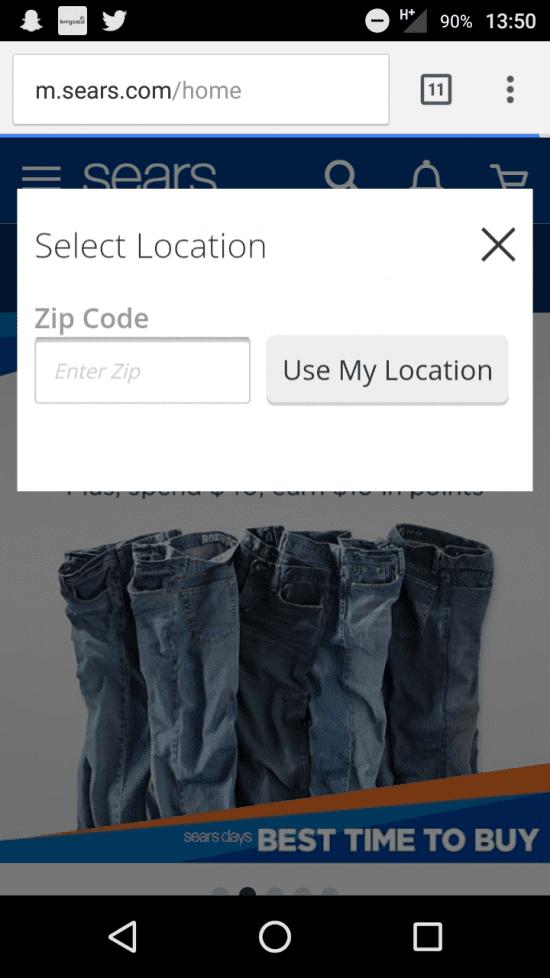

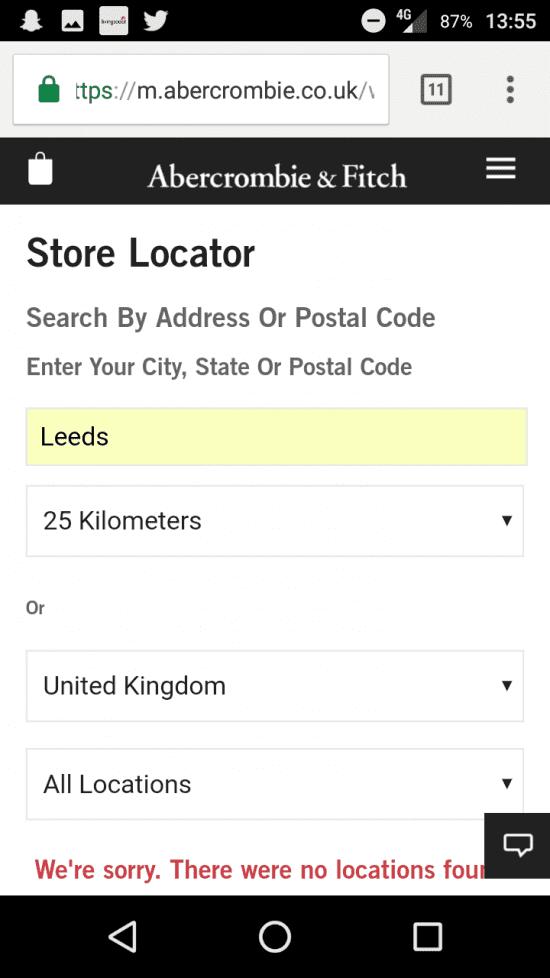

The tasks we perform and the way we use a website can vary across devices. For example, searching for local store information is a common mobile behaviour and predominantly this is carried out on smartphones.

The default pattern for store location is to enter an address and then retrieve a list of stores based on proximity. However, location detection on mobile devices is accurate and faster than typing an address, reducing the cognitive effort for customers.

Compare Scotts Menswear and Lyle & Scott store finder services on a smartphone; Scotts has a persistent icon for the store locator in the site-wide navigation and enables location detection, whereas Lyle & Scott does neither (you can only get to the store locator by expanding ‘Customer Service’ in the footer and selecting ‘Find a Store’), meaning customers have to go to more effort to find the store.
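Once a device has shared its coordinates, ranking stores by proximity needs no address entry at all. The sketch below uses the haversine great-circle formula; the store list and coordinates are made-up sample data and `nearest_stores` is a hypothetical helper, not either retailer's implementation.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0

# Hypothetical store data with approximate city-centre coordinates.
STORES = [
    {"name": "London", "lat": 51.5074, "lon": -0.1278},
    {"name": "Manchester", "lat": 53.4808, "lon": -2.2426},
    {"name": "Glasgow", "lat": 55.8642, "lon": -4.2518},
]


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two latitude/longitude points."""
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def nearest_stores(user_lat, user_lon, stores, limit=3):
    """Return up to `limit` stores ordered from nearest to farthest."""
    ranked = sorted(
        stores,
        key=lambda store: haversine_km(user_lat, user_lon, store["lat"], store["lon"]),
    )
    return ranked[:limit]


# A user located in Leeds (approximate coordinates).
for store in nearest_stores(53.80, -1.55, STORES, limit=2):
    print(store["name"])
```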

To understand how people are using the website on different devices, you need to spend time analyzing browsing behaviour and using web analytics data to discover user journey flows and page engagement metrics. By doing this, you can segment based on device and isolate patterns that only apply to a specific device class.

STRATEGY RECOMMENDATION: Use Analytics For Customer Journey Analysis

Ensure you have your analytics tools configured to track different browsing activities, including:

- Event tracking for key actions like video views, downloads etc.

- Scroll and heat map tracking for page engagement

- Apply device class segmentation (desktop, mobile, tablet) and compare behaviours.

- Identify ‘pinch points’ per device class, where performance for this device class is significantly worse than for other devices.

Feed this back into the user research to get voice of customer feedback to understand why performance might be poorer, then use this insight to inform your design process to make changes that are tailored to the device usage. http://ow.ly/CsdU30qAU7y
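The device segmentation and pinch point steps can be sketched roughly as follows. The session data is invented, and the rule used here (flag a device class whose conversion rate is less than half that of the best device) is an assumption to be replaced with whatever threshold your team agrees.

```python
def conversion_by_device(sessions):
    """Compute conversion rate per device class.

    sessions: iterable of (device_class, converted) pairs.
    """
    totals = {}
    for device, converted in sessions:
        count, wins = totals.get(device, (0, 0))
        totals[device] = (count + 1, wins + int(converted))
    return {device: wins / count for device, (count, wins) in totals.items()}


def pinch_points(rates, tolerance=0.5):
    """Flag device classes whose rate is below `tolerance` times the best rate.

    The 0.5 default is an illustrative assumption, not an industry standard.
    """
    best = max(rates.values())
    return sorted(device for device, rate in rates.items() if rate < best * tolerance)


# Hypothetical export: 100 desktop, 200 mobile and 50 tablet sessions.
sample = (
    [("desktop", True)] * 5 + [("desktop", False)] * 95
    + [("mobile", True)] * 4 + [("mobile", False)] * 196
    + [("tablet", True)] * 2 + [("tablet", False)] * 48
)

rates = conversion_by_device(sample)
print(rates)
print(pinch_points(rates))
```

Running the same comparison per journey step (for example basket, checkout, payment) rather than per session shows where in the journey a device class falls behind.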

Mistake #4 Not Creating Use Case Models to Prioritize Page Content

Use case models align with personas/segmentation. They build out a hierarchy of business requirements for each user type, with each use case rated using a consistent prioritization scoring, for example MoSCoW (en.wikipedia.org/wiki/MoSCoW_method).

Typically a use case looks like the following:

As a ______, I want to ______

For example:

As a customer, I want to search for a product I want to buy.

It uses a hierarchy with a high-level requirement (“I want to search for a product”), which then breaks down into more granular requirements (“I want to be able to search on any page”, “I want to filter my search results”, “I want to add to basket direct from the results page” etc.).

[Figure: Basic use case model for an e-commerce wishlist, from Digital Juggler]

By mapping all the use cases for a webpage or piece of content, you build a picture of what the design has to satisfy. By prioritizing use cases based on business/customer need and impact, you provide a clear brief to designers for which components must take precedence, helping ensure designs align with user needs.

STRATEGY RECOMMENDATION: Create a Simple Framework For Building a Use Case

Follow these steps:

- Define the actors for the new development e.g. customers, admins etc.

- Map high-level use cases to each actor e.g. As a Customer, I want to _____

- For each use case, break this down into more granular requirements

- Agree your prioritization criteria (ensure it’s robust and classifications are distinct and objective i.e. don’t make everything a ‘must have’!)

- Rank each use case based on these criteria

- Agree an ‘MVP’ view – what are the minimum use case expectations to be able to launch this?

- Brief the use case model into the UX team. http://ow.ly/cVdU30qAU7E
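The steps above can be captured in a small data structure. This is a sketch only: the `UseCase` class and helper names are hypothetical, and the MoSCoW ratings given to the search example are assumed for illustration rather than taken from a real project.

```python
from dataclasses import dataclass, field

# MoSCoW categories, ordered from highest to lowest priority.
MOSCOW_ORDER = {"Must": 0, "Should": 1, "Could": 2, "Won't": 3}


@dataclass
class UseCase:
    actor: str
    goal: str
    priority: str  # one of the MOSCOW_ORDER keys
    children: list = field(default_factory=list)  # more granular UseCase items

    def statement(self) -> str:
        return f"As a {self.actor}, I want to {self.goal}"


def flatten(use_case):
    """Yield a use case followed by all of its descendants."""
    yield use_case
    for child in use_case.children:
        yield from flatten(child)


def ranked(use_case):
    """Return every use case in the hierarchy, highest priority first."""
    return sorted(flatten(use_case), key=lambda uc: MOSCOW_ORDER[uc.priority])


def mvp(use_case):
    """The minimum launch scope: only the 'Must' use cases."""
    return [uc for uc in flatten(use_case) if uc.priority == "Must"]


# Priorities below are assumed for the example.
search = UseCase("customer", "search for a product I want to buy", "Must", [
    UseCase("customer", "search on any page", "Must"),
    UseCase("customer", "filter my search results", "Should"),
    UseCase("customer", "add to basket direct from the results page", "Could"),
])

for use_case in mvp(search):
    print(use_case.statement())
```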

Mistake #5 Inefficient Digital Asset Management Impacting Page Speed

Page speed is an important component of CX. Not only do slow loading pages reduce customer satisfaction, they also impact conversion, as bounce rates tend to increase as page speed slows down. Furthermore, Google has made page speed an integral part of its mobile algorithm, so poorly optimized mobile pages will send negative quality signals.

Below are common problems for e-commerce sites:

- Failing to serve assets appropriate to the device requesting them e.g. images not optimized for web, where the file size is larger than required

- Relying on the browser to resize images – the browser still has to load the full image, then check the dimensions you want and resize it locally

- Serving large files to slow connections; assets like video files degrade on slow connections, so you should use device detection to determine when it’s appropriate to serve the content

On mobile every millisecond counts, so even images that are adding 100kb will have a significant impact on performance.

There are several free tools you can use to measure your page performance and compare webpages on your site and versus competitors (see below). More advanced monitoring can be achieved using paid tools like New Relic, Dynatrace and Stackify.

STRATEGY RECOMMENDATION: Select a page speed testing tool and set up a regular process for page speed monitoring.

Then follow these steps:

- Export the data into Excel and create a page speed chart

- Monitor the chart daily to identify any sudden peaks and troughs

- Liaise with your webops/dev team to run diagnostic checks on poor performing pages

- Identify potential issues and create tickets to resolve them

- Establish a process of page speed monitoring around new releases (minor and major) to measure impact of site changes on performance. http://ow.ly/IKo130qAU7H
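If you would rather not eyeball a chart every day, the check for sudden slowdowns can be automated. The sketch below flags any day whose load time is well above the average of the preceding week; the seven-day window, the 25% threshold and the sample timings are all assumptions, and it only looks for slowdowns, not improvements.

```python
from statistics import mean


def flag_spikes(daily_load_times, window=7, threshold=0.25):
    """Return indexes of days that are unusually slow.

    A day is flagged when its load time exceeds the mean of the previous
    `window` days by more than `threshold` (expressed as a fraction).
    """
    flagged = []
    for day in range(window, len(daily_load_times)):
        baseline = mean(daily_load_times[day - window:day])
        if daily_load_times[day] > baseline * (1 + threshold):
            flagged.append(day)
    return flagged


# Hypothetical daily page load times in seconds, e.g. exported from a testing tool.
load_times = [2.1, 2.0, 2.2, 2.1, 2.0, 2.1, 2.2, 2.1, 3.4, 2.2]

print(flag_spikes(load_times))
```

Flagged days are the ones to raise with the webops/dev team, ideally alongside a note of any releases that went live that day.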

Mistake #6 Using Site Wide Rather Than Page Centric UX Patterns

A classic UX mistake is to create a design pattern that is intended to improve the customer experience and help support business goals, but then apply it uniformly across the site instead of thinking how it needs to adapt based on page level use cases.

Take the example below comparing pinned headers on product pages for Ao.com and Freepeople.com. AO recognizes that the customer is now on a product page and the focus is on persuading them to add to basket, whereas Freepeople displays a generic site wide header with no contextually relevant information.

AO navigation geared to add to basket on product page.

It’s important to consider contextual relevance in UX design, ensuring that content components are designed appropriate to the context of their use i.e. where are they being used in the user journey and what is most helpful to the customer?

STRATEGY RECOMMENDATION: Ensure you design use cases

Before applying a new UX pattern to your website, review which pages it’s applicable to and do the following:

- Create a use case model for each page – how will users want to use this pattern on each page?

- Compare the use cases to your default version – does it satisfy all pages?

- Where there is a gap between the default UX and the page level requirement, adapt the design to align

- Ensure tracking is in place to measure customer behaviour and then compare performance across each page type.

Mistake #7 Not Ensuring Web Analytics Can Track Page Level Success Metrics

To know whether or not a website is delivering a high quality of customer experience, you need to have a set of KPIs to measure performance.

However, out-of-the-box web analytics installations typically have measurement gaps. They’ll be adept at measuring session data but won’t be configured to capture specific page interactions, for example capturing video plays and whitepaper downloads.

If we take the example of a download, typically this occurs via a landing page with a short form and ‘Download’ CTA button. Default analytics will capture the landing page URL sessions, and in-page analysis will show sampled page interactions, but there won’t be accurate tracking of field entry, form completion and submission.

If the form has a high abandonment rate, why is this? If we know which field is driving the most exits, or returning a high volume of errors, we can start to understand why performance is poor.

This is where analytics configuration helps improve your measurement of customer experience. By using techniques like event tracking, you can capture page interactions for non-standard elements, as well as using standard reports for metrics like time on page, bounce rate and % exit.
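To illustrate what field-level event tracking makes possible, the sketch below takes a stream of form events and counts which field was the last one touched in sessions that never submitted. The event format `(session_id, action, field)` is a hypothetical export shape, not the schema of any particular analytics tool.

```python
from collections import Counter


def abandonment_by_field(events):
    """Count the last field focused in sessions that never submitted the form.

    events: ordered iterable of (session_id, action, field) tuples, where
    action is "focus" or "submit" and field is None for "submit".
    """
    last_field = {}
    submitted = set()
    for session_id, action, field_name in events:
        if action == "submit":
            submitted.add(session_id)
        elif action == "focus":
            last_field[session_id] = field_name
    return Counter(
        field_name
        for session_id, field_name in last_field.items()
        if session_id not in submitted
    )


# Hypothetical tracked events for a short download form.
events = [
    ("s1", "focus", "name"), ("s1", "focus", "email"), ("s1", "submit", None),
    ("s2", "focus", "name"), ("s2", "focus", "phone"),
    ("s3", "focus", "name"), ("s3", "focus", "email"), ("s3", "focus", "phone"),
    ("s4", "focus", "name"),
]

print(abandonment_by_field(events).most_common(1))
```

In this made-up data the phone field is where most abandoning visitors stop, which is the kind of signal worth following up with user research.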

STRATEGY RECOMMENDATION: Set granular KPIs for specific site pages and user journeys.

You can make usability/UX a measurement dimension of everything. Let's take the example of site search:

- Search depth

- Click through rate (CTR) from search results

- Number of zero-results searches

- Bounce rate for search results page

- Conversion rate from search sessions

These measures build a picture of how successful search is at engaging and converting customers.
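A rough sketch of how these search measures might be calculated from session-level data is shown below. The record fields and the exact metric definitions (for example, treating search depth as the average number of pages viewed after a search) are assumptions; check them against how your own analytics tool defines each metric.

```python
def search_kpis(sessions):
    """Summarise site search performance from per-session records.

    Each record is a dict with hypothetical keys: pages_after_search,
    clicked_result, zero_result_searches, bounced, converted.
    """
    total = len(sessions)
    if total == 0:
        raise ValueError("no search sessions to analyse")
    return {
        "search_depth": sum(s["pages_after_search"] for s in sessions) / total,
        "results_ctr": sum(s["clicked_result"] for s in sessions) / total,
        "zero_result_searches": sum(s["zero_result_searches"] for s in sessions),
        "results_bounce_rate": sum(s["bounced"] for s in sessions) / total,
        "conversion_rate": sum(s["converted"] for s in sessions) / total,
    }


# Four made-up search sessions.
search_sessions = [
    {"pages_after_search": 3, "clicked_result": True, "zero_result_searches": 0, "bounced": False, "converted": True},
    {"pages_after_search": 0, "clicked_result": False, "zero_result_searches": 1, "bounced": True, "converted": False},
    {"pages_after_search": 2, "clicked_result": True, "zero_result_searches": 0, "bounced": False, "converted": False},
    {"pages_after_search": 1, "clicked_result": True, "zero_result_searches": 1, "bounced": False, "converted": False},
]

for name, value in search_kpis(search_sessions).items():
    print(name, value)
```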

Once you've defined the KPIs you want to measure, ensure your web analytics tools are configured to capture this data and present it in an accessible format. http://ow.ly/nOMj30qAU7Q

Mistake #8 Failure To Regularly Analyse Performance Based on Success Metrics

Once you’ve established the KPIs to measure performance against, you need a process in place to ensure these are regularly analysed. This doesn’t mean generating a report and thinking ‘job done’; reporting is not analysis!

It means agreeing a reporting cycle but ensuring that each time the report is generated, somebody is interrogating the data to ask ‘why?’ when KPIs change. For example, when measuring goal completion rate for email sign-up, if the completion rate increases sharply week-on-week, what analysis is done to understand what has caused this?

A common mistake is submitting business reports, flagging good and bad performance but having no explanation of what has happened or data to validate the change.

STRATEGY RECOMMENDATION: Define a regular review process

Put a process in place to analyse KPI reports and provide data-driven insights into why performance is changing. Consider the following:

- Appoint an owner for each report

- Ensure there is an experienced web analyst supporting the report owners

- Agree variance thresholds for each KPI above/below which analysis is required

- When a KPI exceeds the threshold, report owner runs an initial diagnostic (triage)

- If the report owner can’t explain the change, escalate to the web analyst for deeper analysis & interrogation

- Include a section in each report for ‘Business insight & learning’ – once analysis is complete, attach findings and summarise key learning for the business.
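The variance threshold step lends itself to a simple automated check. In the sketch below, the KPI names, values and 10% thresholds are invented for illustration, and the function assumes the previous period's value is never zero.

```python
def needs_analysis(previous, current, thresholds):
    """Return the KPIs whose relative change exceeds the agreed threshold.

    thresholds maps each KPI name to a fraction (0.10 means +/-10%).
    Changes are checked in both directions, since a sharp rise needs
    explaining just as much as a sharp fall.
    Assumes previous[kpi] is non-zero for every KPI.
    """
    flagged = {}
    for kpi, limit in thresholds.items():
        change = (current[kpi] - previous[kpi]) / previous[kpi]
        if abs(change) > limit:
            flagged[kpi] = round(change, 3)
    return flagged


# Hypothetical week-on-week values.
last_week = {"email_signup_rate": 0.020, "bounce_rate": 0.40}
this_week = {"email_signup_rate": 0.029, "bounce_rate": 0.41}
agreed_thresholds = {"email_signup_rate": 0.10, "bounce_rate": 0.10}

print(needs_analysis(last_week, this_week, agreed_thresholds))
```

Here only the email sign-up rate would be passed to the report owner for triage; the small movement in bounce rate stays within tolerance.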

By adopting this process, your trading meetings will become more productive because performance fluctuations can be explained using data and learning from the analysis can be shared across the organization.

Mistake #9 Lack of Investment in Tools to Measure Usability and UX

If you don’t have budget available to invest in the right tools, your customer experience analysis will be compromised. We’ve seen organisations happy to spend thousands of pounds on paid media but baulk at hundreds of pounds for UX software tool licenses.

There is a limit to what your basic web analytics tool can achieve. If you have an enterprise tool like Adobe, you may already be using integrations with session replay tools like Clicktale, but as most organisations are reliant upon the free version of Google Analytics, this type of advanced feature isn’t available.

To build a complete picture of customer experience, you need to be able to measure and analyse your customers’ behaviour throughout the website. This requires multiple data sets:

- Behavioural - what they do, when and where

- Emotional - why they do things, what they like and dislike and why

- Functional - what they click on, what paths they follow, where they exit

- Rational - how they respond based on what the website presents them

To help you build this picture, you need to use multiple tools that help answer different questions:

- What are people doing? > web analytics session and user ID data

- What are their browsing paths and barriers? > session replay, scroll and heat mapping

- Why are they doing it? > customer surveys, video testing

Mistake #10 Not Applying CRO Techniques To Improve Results

By applying the principles listed above, you should be evolving towards a customer-centric approach to e-commerce design where you better understand what customers want/need, and how to deliver this across devices.

But don’t think your work is over yet!

This is just the start. A common mistake e-commerce teams make is to launch new designs and then move on to the next project. As long as the KPIs are positive, the risk is to think it’s working well.

However, you should apply the core principle of conversion rate optimization (CRO) to all site development; the only way to optimize performance effectively is to iteratively test your design to discover how you can further improve results.

You need to embed a culture of test + learn to ensure that you optimize your website to learn what works best. This includes testing:

- What brand messages to communicate

- What service elements to promote

- How to use reassurance and persuasion messaging

- How to promote product

- What content to use and how much of it

- How to be creative
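Whatever you test, you need a way to judge whether a difference between two variants is likely to be real. One common approach, shown here as a sketch rather than a recommendation of any particular testing tool, is a two-proportion z-test. The visitor and conversion counts are invented, and the function assumes the pooled conversion rate is strictly between 0 and 1.

```python
from math import erf, sqrt


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare conversion rates of variants A and B.

    Returns (z, p_value), where p_value is two-sided.
    Assumes the pooled conversion rate is strictly between 0 and 1.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    standard_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / standard_error
    # Two-sided p-value from the standard normal cumulative distribution.
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return z, p_value


# Hypothetical test: 5,000 visitors per variant, 200 vs 250 conversions.
z, p_value = two_proportion_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value suggests the uplift is unlikely to be chance alone, but it is still worth agreeing the sample size and test duration before the test starts rather than stopping as soon as a result looks good.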

If you don’t understand the problem, how can you fix it properly?

Example scenario:

- Revenue from a client's exit intent campaign suddenly drops by 48% week-on-week (WoW)

- The number of times the overlay has been triggered has fallen significantly

- Engagement rate with the overlay has dropped by 28%

- Emotional reaction = something wrong with the campaign!

But after some digging in the analytics data:

- Traffic on the website is down – so fewer sessions for exit intent

- New overlay versions were added, these aren’t performing

- Underlying performance of old overlays is stable – it’s AOV that’s hit revenue the most

- This is in-line with the overall site performance

Conclusion:

To improve big numbers, we need to look beyond the campaign; to improve small numbers, we need to optimise the new designs. http://ow.ly/PnKM30qAU7Z
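The kind of digging described in this scenario can be made systematic by breaking revenue into its drivers: revenue = sessions x conversion rate x average order value (AOV). The sketch below splits a revenue change across those three factors using log ratios. All figures are hypothetical, chosen only so that revenue falls by 48%, and are not the client's actual data; the function also assumes revenue did change between the two periods.

```python
from math import log


def revenue(period):
    """Revenue as the product of its three drivers."""
    return period["sessions"] * period["conversion_rate"] * period["aov"]


def contribution_shares(before, after):
    """Share of the revenue change attributable to each driver.

    Uses log ratios, which add up exactly for multiplicative factors.
    Assumes total revenue changed (otherwise the total log change is zero).
    """
    logs = {key: log(after[key] / before[key]) for key in before}
    total = sum(logs.values())
    return {key: value / total for key, value in logs.items()}


# Hypothetical week-on-week figures for the campaign.
last_week = {"sessions": 10_000, "conversion_rate": 0.050, "aov": 90.0}
this_week = {"sessions": 8_000, "conversion_rate": 0.045, "aov": 65.0}

change = revenue(this_week) / revenue(last_week) - 1
print(f"Revenue change: {change:.0%}")
for driver, share in contribution_shares(last_week, this_week).items():
    print(f"{driver}: {share:.0%} of the change")
```

In this made-up example AOV accounts for the largest share of the drop, with traffic second, which mirrors the pattern in the scenario: the campaign itself was not the main cause.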

© 2025

Black Law Firm Marketing Agency | A Division of Remnant Digital Consulting Inc.